Since the first commercial computers appeared in the 1950s, information technology (IT) and software development have evolved at a rapid pace. Today, these technologies are fundamental to the operation of most businesses and organisations around the world. From FORTRAN, one of the first high-level programming languages, to the latest innovations in artificial intelligence and quantum computing, the evolution of IT has changed the way we live, work and interact with each other.

The 1950s

The 1950s saw the creation, as mentioned in the introduction, of FORTRAN (Formula Translation), a programming language developed by IBM and first released in 1957. It was designed to let programmers write complex mathematical formulae for use in scientific research and engineering.

FORTRAN is widely regarded as the first widely used high-level programming language: instructions were written in a notation closer to human language and mathematics, rather than in machine code or assembly language. This allowed programmers to write code faster and with fewer errors than with earlier low-level languages. FORTRAN quickly became one of the most widely used programming languages of its era and is still used today in some scientific and engineering applications.

The 1950s also saw the first commercial computers, such as the UNIVAC I (Universal Automatic Computer) and the IBM 701. These machines were enormous and very expensive, but they represented a breakthrough in data processing capability. They were used mainly for scientific and military calculations, but they laid the foundations for the coming digital revolution.

The 1960s

Major developments in software development and information technology occurred throughout the 1960s.

The decade saw the release of IBM's OS/360, one of the most influential early operating systems, and of the BASIC programming language (1964), along with significant improvements in data storage capacity and processing speed.

Dynamic random-access memory (DRAM) was invented at IBM in the late 1960s, paving the way for cheaper, denser memory that let computers store and access data faster and more efficiently.

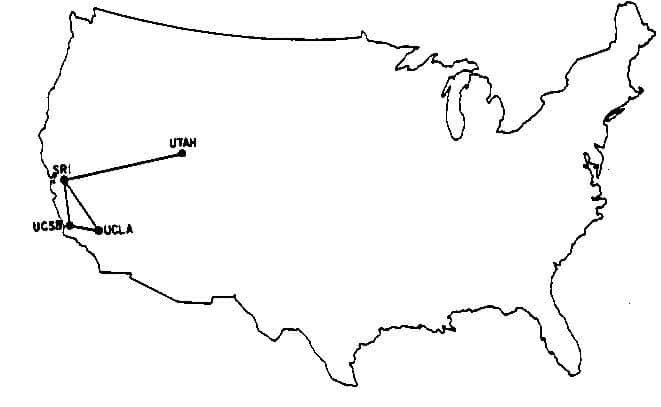

There were also major advances in computer networking, laying the foundations for the later development of the Internet. One of the earliest examples was ARPANET, a computer network launched in 1969 and funded by the US Department of Defence's Advanced Research Projects Agency (ARPA).

The 1970s

This decade saw the introduction of the Altair 8800 (1975), often credited as the first commercially successful personal computer, which became a big hit with technology enthusiasts. It also saw the development of the C programming language at Bell Labs, which became one of the most popular and widely used programming languages worldwide.

Another important breakthrough in the 1970s was the floppy disk. Floppy disks allowed users to store and transfer data more easily and efficiently than earlier media, such as punched cards and open-reel magnetic tape.

Additionally, the 1970s saw major advances in computer networking with the development of the TCP/IP protocols, which allowed computers on different networks to communicate with each other. This breakthrough laid the foundation for the later development of the Internet and the World Wide Web.

The 1980s

During the 1980s, information technology and software development continued to evolve at a rapid pace. The decade saw important developments in the software industry, such as the release of Microsoft Windows in 1985 and the launch of the GNU Project in 1983, which set out to build a free, Unix-like operating system.

Furthermore, the 1980s saw the arrival of CD-ROM technology, which allowed software developers to create and distribute multimedia content, such as games and educational programmes, on a single disc, and which went on to transform the entertainment industry.

There were also major advances in computer networking technology in the 1980s: Ethernet was standardised as IEEE 802.3 in 1983, and early work on wireless networking followed the US Federal Communications Commission's decision in 1985 to open unlicensed frequency bands. These advances allowed for greater connectivity and better communication between computers and devices on a network.

The 1990s

The 1990s brought major advances in network connectivity and online communication, and the Internet emerged as a vital tool for communication and information sharing.

The invention of the World Wide Web by Tim Berners-Lee at CERN and the arrival of web browsers such as Netscape Navigator and Microsoft Internet Explorer allowed users to access and share information online on an unprecedented scale. This led to explosive growth in the information technology and software development industry.

The 1990s also brought DVD technology, which stored several times more data than a CD-ROM and allowed high-quality video and multimedia content to be distributed on a single disc. The decade also saw the growth of online multiplayer video games and the spread of 3D graphics technology.

The 2000s

The 2000s witnessed rapid advances in information technology and software development, leading to major changes in the way people interact with technology.

The rise of mobile technology brought smartphones into the mainstream, with devices such as the BlackBerry and Apple's iPhone (2007). These devices allowed users to access the internet and perform a wide range of tasks, from email and web browsing to social networking.

Cloud computing also became a major force in the software industry in the 2000s, with the emergence of services such as Amazon Web Services and Google App Engine (the forerunner of Google Cloud Platform), which allowed companies to host applications and store data on remote servers.

Another important development in the 2000s was the growing practical use of machine learning, a branch of artificial intelligence, which changed the way data was processed and analysed. These techniques laid the groundwork for virtual assistants, chatbots and customer service bots.

The 2010s

The 2010s were a period of significant change in information technology and software development, with the emergence of new technologies that transformed the way people interact with technology and with each other.

Mobile technology continued to evolve as touch-screen smartphones became mainstream and mobile applications grew in popularity. Social networking also became a major force, with Facebook reaching over 1 billion users in 2012.

Artificial intelligence also advanced rapidly in the 2010s, driven largely by deep learning, and was increasingly used in a wide range of applications, from virtual assistants to business process automation. Augmented and virtual reality technology also saw a revival, with devices such as the Oculus Rift and Microsoft HoloLens.

There were also growing concerns about online privacy and security, following a number of major security breaches affecting businesses and governments. This led to increased regulation of the IT industry, such as the EU's General Data Protection Regulation (GDPR), which took effect in 2018, and a greater focus on protecting users’ privacy.

Current IT and Software Development

In conclusion, information technology and software development have evolved remarkably over the past seven decades. From the first commercial computers to the present day, technology has changed the way we interact with the world. Businesses and individuals can now do things that would never have been possible before.

Mobile technology and artificial intelligence are transforming the way business is done and data is accessed. Cloud technology has made access to services easier and more efficient than ever before. However, online privacy and security remain major concerns that need to be addressed.